Scaling the Ceph file system

1. Problem statement and general practice

Ceph developers recommend planning capacity ahead and responding early so that a growing file system never reaches its maximum storage capacity. As a result, scaling the file system can become a routine operation.

| We recommend checking the cluster regularly to see if it is getting close to its maximum capacity. When the cluster is almost full, add one or more Object Storage Daemons (OSDs) to increase the capacity of the Ceph cluster. |
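The regular check described in the note can be scripted. The following is a minimal Python sketch. It assumes you have captured the output of `ceph df --format json` from the ceph-operator pod (with the same `--conf` option as the other commands in this topic), and that this JSON contains `stats.total_bytes` and `stats.total_used_raw_bytes`. The thresholds mirror Ceph's default `mon_osd_nearfull_ratio` (0.85) and `mon_osd_full_ratio` (0.95). Ceph applies these ratios per OSD, so a cluster-wide figure is only an approximation: a single unevenly filled OSD can trigger a warning earlier.

```python
import json

# Ceph's default thresholds (mon_osd_nearfull_ratio / mon_osd_full_ratio).
NEARFULL_RATIO = 0.85
FULL_RATIO = 0.95


def raw_usage_ratio(ceph_df_json: str) -> float:
    """Fraction of raw cluster capacity in use.

    Assumes the JSON printed by `ceph df --format json`, with
    stats.total_bytes and stats.total_used_raw_bytes.
    """
    stats = json.loads(ceph_df_json)["stats"]
    total = stats["total_bytes"]
    if total <= 0:
        raise ValueError("cluster reports no raw capacity")
    return stats["total_used_raw_bytes"] / total


def capacity_status(ratio: float) -> str:
    """Classify a usage ratio against the default thresholds."""
    if ratio >= FULL_RATIO:
        return "full"
    if ratio >= NEARFULL_RATIO:
        return "nearfull"  # time to add OSDs
    return "ok"


if __name__ == "__main__":
    # Illustrative numbers only, not real cluster output.
    sample = '{"stats": {"total_bytes": 1000, "total_used_raw_bytes": 870}}'
    ratio = raw_usage_ratio(sample)
    print(f"{ratio:.0%} used -> {capacity_status(ratio)}")
```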

2. How Ceph file system scaling works

Within the registry management Platform, Ceph is scaled exclusively through the Origin Kubernetes Distribution (OKD) platform and its built-in Ceph operator, which follows the operator pattern.

| The operator pattern lets you extend the behavior of the OKD platform with operators: software extensions that use custom resources to manage applications, their components, and their configuration. Operators follow Kubernetes principles, notably automation and the control loop. |

| Reconciliation, or bringing a resource to its agreed (desired) state, is part of the self-healing capabilities of the OKD platform: the current state of the configuration is repeatedly compared with the desired state and brought in line with it. |
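To make the control loop concrete, here is a toy Python model of reconciliation. It is not how Rook or the OKD operators are implemented; the `ClusterState` type and the "OSDs per node" model are hypothetical simplifications. The point it illustrates is that each pass acts on the difference between the desired and the current state, so once the actions have succeeded, another pass produces no further work.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterState:
    """Hypothetical toy model: number of OSDs per node."""
    osds_per_node: dict[str, int] = field(default_factory=dict)


def reconcile(desired: ClusterState, current: ClusterState) -> list[str]:
    """One pass of a control loop: diff desired vs. current, return actions."""
    actions = []
    nodes = desired.osds_per_node.keys() | current.osds_per_node.keys()
    for node in sorted(nodes):
        want = desired.osds_per_node.get(node, 0)
        have = current.osds_per_node.get(node, 0)
        if want > have:
            actions.append(f"create {want - have} OSD(s) on {node}")
        elif want < have:
            actions.append(f"remove {have - want} OSD(s) from {node}")
    return actions


if __name__ == "__main__":
    desired = ClusterState({"node-a": 3, "node-b": 3})
    current = ClusterState({"node-a": 3, "node-b": 2})
    while actions := reconcile(desired, current):
        print(actions)
        # Toy actuator: pretend every action succeeded immediately.
        current = ClusterState(dict(desired.osds_per_node))
    print("converged")
```

Declaring three OSDs on `node-b` while only two exist yields a single create action; after that, `reconcile` returns an empty list and the loop stops.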

There are two ways to extend the Ceph file system: by adding disks to the existing virtual machines or by adding new virtual machines.

2.1. Adding disks to the current virtual machines

To create new disks for the virtual machines and add them to the Ceph pool, perform these steps:

-

Sign in to the OKD web console.

-

Go to Storage > Overview > OpenShift Container Storage > All instances > ocs-storagecluster.

-

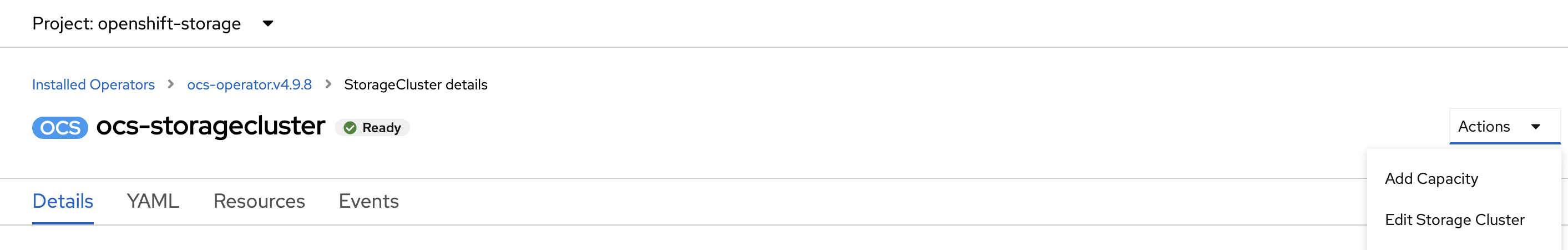

Expand the Actions menu and select

Add Capacity. For example:

-

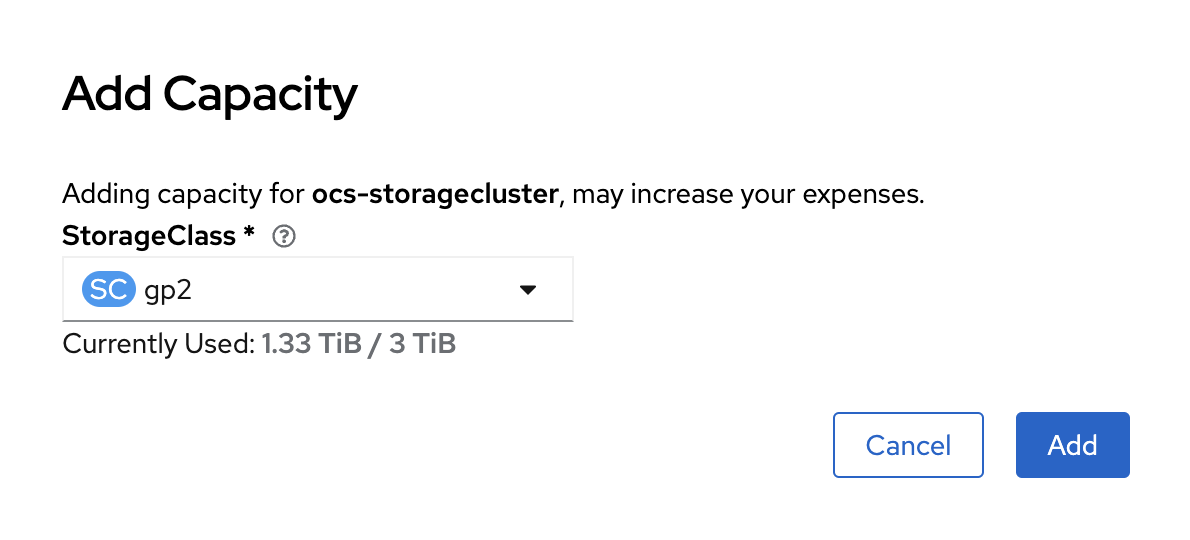

In the Add Capacity window, click

Addand wait until the new disks are created and added to the Ceph pool.

To check the status of the newly created OSDs, run the following command in the ceph-operator pod:

```shell
ceph --conf=/var/lib/rook/openshift-storage/openshift-storage.config osd tree
```
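To check OSD status from a script rather than by reading the tree, you can add `--format json` to the same command and filter the result. The Python sketch below assumes the JSON shape of `ceph osd tree --format json`: a `nodes` list in which OSD entries have `"type": "osd"`, a `name`, and a `status` of `up` or `down`. The sample data is illustrative only.

```python
import json


def osd_status(osd_tree_json: str) -> dict[str, str]:
    """Map each OSD name (for example "osd.0") to its reported status."""
    nodes = json.loads(osd_tree_json)["nodes"]
    # CRUSH buckets such as root, rack, or host are skipped; only OSDs are kept.
    return {n["name"]: n.get("status", "unknown") for n in nodes if n.get("type") == "osd"}


def osds_not_up(osd_tree_json: str) -> list[str]:
    """Names of OSDs whose status is anything other than "up"."""
    return sorted(name for name, status in osd_status(osd_tree_json).items() if status != "up")


if __name__ == "__main__":
    sample = json.dumps({"nodes": [
        {"id": -1, "name": "default", "type": "root", "children": [-3]},
        {"id": -3, "name": "rack0", "type": "rack", "children": [0, 1]},
        {"id": 0, "name": "osd.0", "type": "osd", "status": "up"},
        {"id": 1, "name": "osd.1", "type": "osd", "status": "down"},
    ], "stray": []})
    print(osds_not_up(sample))  # ['osd.1']
```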

| You can run multiple OSDs on the same virtual machine, but make sure that the combined throughput of its OSD disks does not exceed the network bandwidth available to that machine, because the data read from and written to those disks travels over the network. |
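A quick back-of-the-envelope check helps here. The Python sketch below converts per-disk throughput to Gbit/s (decimal units, 1 MB/s = 8 Mbit/s) and compares the total with the NIC speed. The per-disk and NIC figures are hypothetical inputs you would take from your own hardware. The calculation ignores replication and recovery traffic, which also uses network bandwidth, so treat `max_osd_disks` as an upper bound.

```python
def disk_throughput_gbps(per_disk_mb_s: float, disks: int) -> float:
    """Combined disk throughput in Gbit/s, using decimal units (1 MB/s = 8 Mbit/s)."""
    return per_disk_mb_s * disks * 8 / 1000


def network_is_bottleneck(per_disk_mb_s: float, disks: int, nic_gbps: float) -> bool:
    """True if the OSD disks together can move more data than the NIC."""
    return disk_throughput_gbps(per_disk_mb_s, disks) > nic_gbps


def max_osd_disks(per_disk_mb_s: float, nic_gbps: float) -> int:
    """Largest number of such disks whose combined throughput fits the NIC."""
    nic_mb_s = nic_gbps * 1000 / 8
    return int(nic_mb_s // per_disk_mb_s)


if __name__ == "__main__":
    # Hypothetical hardware: 500 MB/s disks behind a 10 Gbit/s NIC.
    print(disk_throughput_gbps(500, 3))       # 12.0
    print(network_is_bottleneck(500, 3, 10))  # True
    print(max_osd_disks(500, 10))             # 2
```

With a 10 Gbit/s NIC (1250 MB/s), three disks at 500 MB/s each (12 Gbit/s combined) would saturate the link, so at most two such disks fit.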

2.2. Adding new virtual machines to the Ceph MachineSet

To add new virtual machines to the Ceph MachineSet, perform these steps:

-

Sign in to the OKD web console.

-

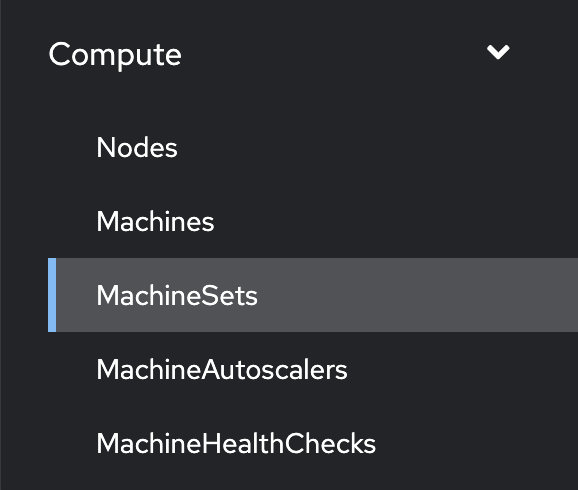

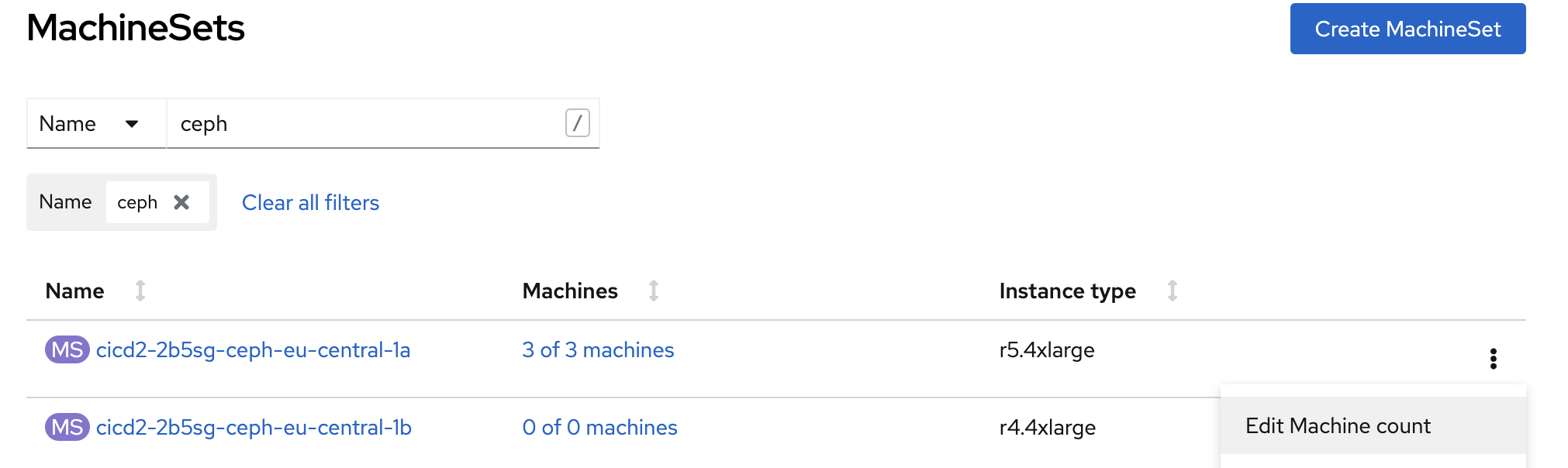

Go to Compute > MachineSets.

-

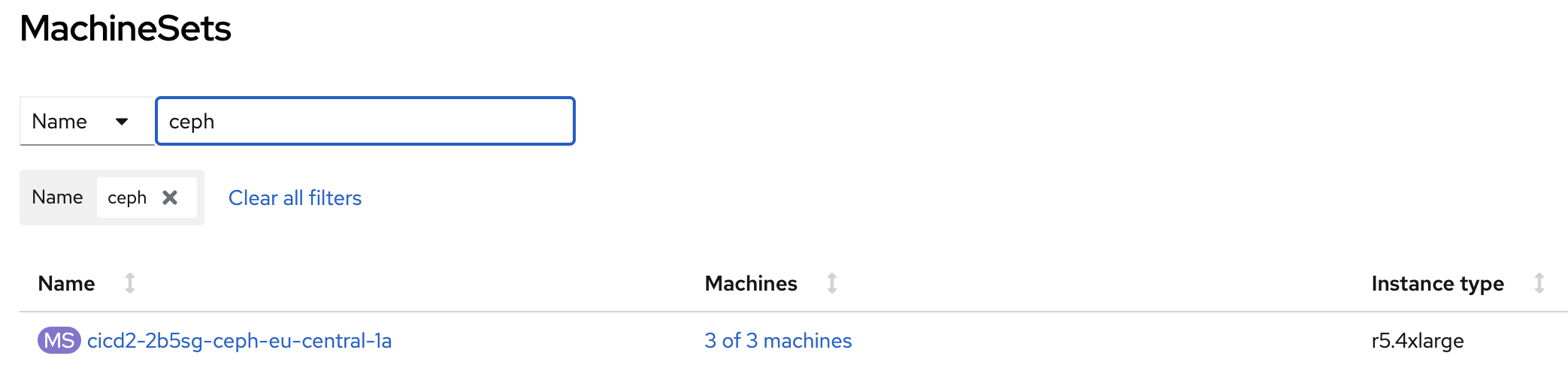

Find the required MachineSet. For example:

-

Expand the actions menu and select

Edit Machine count.

-

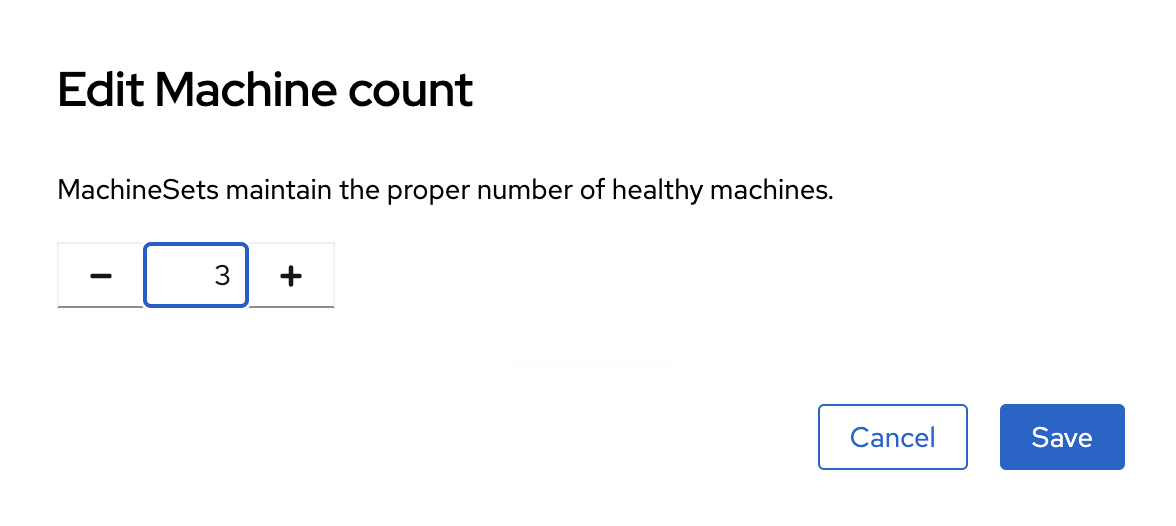

Specify the desired amount of machines and click

Save.

-

Wait until the status of the new virtual machine changes to

Running. Once it does, it becomes available to Ceph, and you can add new disks and OSDs to it.
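The same scaling can also be done from the command line with `oc scale machineset <name> --replicas=<count> -n openshift-machine-api` (OKD 4 usually keeps MachineSets in the `openshift-machine-api` namespace; verify this in your cluster). To wait for the new machines from a script, you can poll `oc get machineset <name> -n openshift-machine-api -o json` and compare the requested and ready counts. The Python sketch below assumes the MachineSet fields `spec.replicas` and `status.readyReplicas`, and uses the ready count as a proxy for the Running status shown in the web console.

```python
import json


def machineset_ready(machineset_json: str) -> bool:
    """True when the MachineSet has as many ready machines as it requests."""
    ms = json.loads(machineset_json)
    desired = ms["spec"]["replicas"]
    # readyReplicas may be absent (for example, while no machine is ready).
    ready = ms.get("status", {}).get("readyReplicas", 0)
    return ready == desired


if __name__ == "__main__":
    # Illustrative documents, trimmed to the fields the sketch reads.
    scaling = '{"spec": {"replicas": 4}, "status": {"replicas": 4, "readyReplicas": 3}}'
    done = '{"spec": {"replicas": 4}, "status": {"replicas": 4, "readyReplicas": 4}}'
    print(machineset_ready(scaling), machineset_ready(done))  # False True
```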

| After completing all the steps, check the current status of Ceph either in the OKD web console or by running the following command in the ceph-operator pod: `ceph --conf=/var/lib/rook/openshift-storage/openshift-storage.config health detail` |
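For scripted checks, the same command accepts `--format json`. The Python sketch below assumes the JSON layout of `ceph health detail --format json`: a top-level `status` (`HEALTH_OK`, `HEALTH_WARN`, or `HEALTH_ERR`) and a `checks` object whose entries contain `severity` and `summary.message`. The sample message text is illustrative.

```python
import json


def health_summary(health_json: str) -> tuple[str, list[str]]:
    """Return the overall status and one line per active health check."""
    health = json.loads(health_json)
    lines = [
        f"{name} [{check['severity']}]: {check['summary']['message']}"
        for name, check in sorted(health.get("checks", {}).items())
    ]
    return health["status"], lines


if __name__ == "__main__":
    sample = json.dumps({
        "status": "HEALTH_WARN",
        "checks": {
            "OSD_NEARFULL": {
                "severity": "HEALTH_WARN",
                "summary": {"message": "1 nearfull osd(s)"},
            }
        },
    })
    status, lines = health_summary(sample)
    print(status)
    for line in lines:
        print(" ", line)
```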

3. How Ceph bucket scaling works

Each Ceph bucket expands dynamically as files are added and can occupy all of the available space in the Ceph File System (CephFS). To scale buckets, add storage capacity as described in section 2 of this topic.
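How much space "all of the available space" actually is depends on the replication factor covered in section 4. As a rough estimate for replicated pools, each object is stored `size` times, and Ceph stops accepting writes once OSDs reach the full ratio (0.95 by default), so usable space ≈ raw capacity × full ratio ÷ size. The Python sketch below encodes that formula. The result is an upper bound, because uneven data distribution across OSDs reduces it further, and the cluster sizes in the example are hypothetical.

```python
DEFAULT_FULL_RATIO = 0.95  # Ceph's default mon_osd_full_ratio


def usable_capacity(raw: float, replica_size: int, full_ratio: float = DEFAULT_FULL_RATIO) -> float:
    """Upper-bound estimate of writable space in a replicated pool.

    Every object is stored replica_size times, and writes stop once OSDs
    reach full_ratio, so usable ≈ raw × full_ratio / replica_size.
    The result is in the same unit as raw.
    """
    if replica_size < 1:
        raise ValueError("replica_size must be at least 1")
    if not 0 < full_ratio <= 1:
        raise ValueError("full_ratio must be in (0, 1]")
    return raw * full_ratio / replica_size


if __name__ == "__main__":
    # Hypothetical cluster: 3 VMs with 2 TiB of OSD disks each.
    raw_tib = 3 * 2
    for size in (2, 3):
        print(f"size={size}: ~{usable_capacity(raw_tib, size):.2f} TiB usable")
```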

4. Changing Ceph's replication factor

To change the replication factor on a deployed OKD cluster, perform these steps:

- In the OKD web console, open the YAML definition of the StorageCluster resource and change the following section.

  From:

  ```yaml
  managedResources:
    cephBlockPools: {}
  ```

  To:

  ```yaml
  managedResources:
    cephBlockPools:
      reconcileStrategy: init
  ```

  With `reconcileStrategy: init`, the operator creates the block pool only during initialization and no longer reconciles it, so it does not revert the manual change you make in the next step.

- In the OKD web console, open the YAML definition of the CephBlockPool resource and change the replication factor in the `replicated > size` field:

  ```yaml
  spec:
    enableRBDStats: true
    failureDomain: rack
    replicated:
      replicasPerFailureDomain: 1
      size: 3
      targetSizeRatio: 0.49
  ```

- Wait until Ceph applies your changes.
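If you prefer the command line to the web console, the two YAML changes in the steps above can be applied as JSON merge patches with `oc patch <kind> <name> -n openshift-storage --type merge -p '<patch>'`. The Python sketch below only builds the patch bodies and the argument lists; it does not run `oc`. It assumes the StorageCluster is named `ocs-storagecluster` and the pool `ocs-storagecluster-cephblockpool`; the pool name is a hypothetical default, so check it with `oc get cephblockpool -n openshift-storage` first.

```python
import json
import shlex

NAMESPACE = "openshift-storage"  # matches the path used by the ceph commands in this topic
STORAGE_CLUSTER = "ocs-storagecluster"
BLOCK_POOL = "ocs-storagecluster-cephblockpool"  # hypothetical default name; verify it


def storagecluster_patch() -> dict:
    """Stop the operator from reconciling block pools after creation."""
    return {"spec": {"managedResources": {"cephBlockPools": {"reconcileStrategy": "init"}}}}


def blockpool_patch(size: int) -> dict:
    """Set the replication factor (replicated > size) of a CephBlockPool."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return {"spec": {"replicated": {"size": size}}}


def oc_patch_args(kind: str, name: str, patch: dict) -> list[str]:
    """Build the argv for a merge patch; pass it to subprocess.run to apply it."""
    return ["oc", "patch", kind, name, "-n", NAMESPACE,
            "--type", "merge", "-p", json.dumps(patch)]


if __name__ == "__main__":
    # Print the commands in the order of the steps above; nothing is executed.
    for args in (
        oc_patch_args("storagecluster", STORAGE_CLUSTER, storagecluster_patch()),
        oc_patch_args("cephblockpool", BLOCK_POOL, blockpool_patch(3)),
    ):
        print(shlex.join(args))
```

Apply the StorageCluster patch first, as in the web console procedure; otherwise the operator may restore the original pool settings on its next reconciliation.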